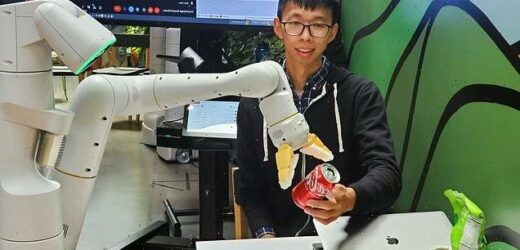

OK Google, get me a Coke: Tech giant shows off artificially intelligent robot waiter that can fetch drinks and clean surfaces in response to verbal commands

- Multipurpose robot demonstrated its ability to respond to indirect commands

- The bot uses Google’s AI technology to understand cues in natural language

- It then filters through a range of responses to choose the most appropriate one

- Most current robots are only able to understand simple and direct instructions

Sci-fi fans rejoice! We are one step closer to making C-3PO a reality, after a robot butler demonstrated its cleaning and drink-fetching skills.

The mechanical waiter was created by Alphabet, the parent company of Google, which is developing robots that can perform a range of tasks.

Google has recently installed advanced language skills into the fleet, known as ‘Everyday Robots’, so they are better able to understand natural verbal instructions.

Most robots are only able to interpret simple, to-the-point commands, like ‘bring me a glass of water’, but these can respond to indirect requests.

In Google’s example, an Everyday Robot prototype is asked ‘I spilled my drink, can you help?’, which it filters through its internal list of possible actions.

The bot finally interprets the question as ‘fetch me the sponge from the kitchen’ – a response that was not directly asked for, but is useful to the situation.

THE ‘EVERYDAY ROBOTS’ PROJECT

Alphabet’s ‘Everyday Robots’ project aims to develop robots capable of performing day-to-day tasks.

These could include sorting rubbish, wiping tables in cafes or tidying chairs in meeting rooms.

In the future, the team hopes the robots will help society in more advanced ways, such as by enabling older people to maintain their independence for longer.

They will use cameras and sophisticated machine learning models to see and understand the world.

The bots will learn from human demonstration, the experiences of other robots and even from simulation in the Cloud.

Today, the team is testing its robots in Alphabet locations across Northern California.

While the multipurpose robots are still in development and not for sale, the integration of Google’s Large Language Model has increased their capabilities.

Dubbed PaLM-SayCan, the artificial intelligence (AI) technology draws understanding of the world from Wikipedia, social media and other web pages.

Similar AI underlies chatbots or virtual assistants, but has not been applied to robots this expansively before, Google said.

This is because ‘a language model generally does not interact with its environment nor observe the outcome of its responses’, the company stated in a blog post.

The tech giant wrote: ‘This can result in it generating instructions that may be illogical, impractical or unsafe for a robot to complete in a physical context.’

For example, given the request ‘I spilled my drink, can you help?’, a language model may respond ‘You could try using a vacuum cleaner’, which could be unsafe for a robot to execute.

The new robots can thus interpret naturally spoken commands, weigh possible actions against their capabilities and plan smaller steps to achieve the task.
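The weighing step can be pictured with a minimal Python sketch. It assumes each candidate action gets two scores, one for how well it fits the request and one for how likely the robot is to pull it off, and picks the action with the highest product. The action names, the numbers and the `choose_action` helper are all invented for illustration and are not taken from Google's code.

```python
from typing import Dict


def choose_action(relevance: Dict[str, float], feasibility: Dict[str, float]) -> str:
    """Return the action with the highest relevance * feasibility score.

    Hypothetical helper: an action missing from `feasibility` is treated
    as impossible for the robot (score 0).
    """
    combined = {
        action: relevance[action] * feasibility.get(action, 0.0)
        for action in relevance
    }
    return max(combined, key=combined.get)


# Made-up scores for the request "I spilled my drink, can you help?"
# How well each action fits the request (the language side).
relevance = {
    "use a vacuum cleaner": 0.50,
    "find a sponge": 0.40,
    "fetch a Coke": 0.10,
}
# How likely the robot is to manage each action (the capability side).
feasibility = {
    "use a vacuum cleaner": 0.0,  # assumed: the robot has no vacuum cleaner
    "find a sponge": 0.9,
    "fetch a Coke": 0.8,
}

best = choose_action(relevance, feasibility)
print(best)
```

In this toy example the vacuum cleaner fits the request best on wording alone, but its zero capability score rules it out, so the sponge wins.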

A paper, published yesterday on arXiv, reveals that across 101 instructions the robots planned a correct response 84 per cent of the time.

They also executed the instructions successfully 74 per cent of the time, up from a 61 per cent success rate before the new language model was added.

The robots are still limited by the number of actions they can perform, a common roadblock in the development of multi-functional home robots.

They also cannot yet be summoned by the phrase ‘OK, Google’, familiar to users of the company’s other products.

While Google says it is pursuing development responsibly, there could be concerns that the bots will become surveillance machines, or could be equipped with chat technology that can give offensive responses.

‘It’s going to take a while before we can really have a firm grasp on the direct commercial impact,’ said Vincent Vanhoucke, senior director for Google’s robotics research.

For now, the prototypes will continue to grab snacks and drinks for Google employees.

TESLA’S HUMANOID ROBOT: OPTIMUS

This week, Elon Musk revealed new details about Tesla’s humanoid robot called Optimus.

Originally called Tesla Bot, plans for the robot were announced at AI Day in August 2021.

The robot will stand 5’8 and weigh 125 pounds, and will be able to move at 5mph and carry 45 pounds.

Tesla Optimus will have human-like hands and feet and sensors for eyes.

The bot will be ‘friendly’ to humans, but people will also be able to outrun and overpower the robots if needed.

It will be able to perform tasks that are repetitive or boring, but could also serve as a companion.

‘In the future, a home robot may be cheaper than a car. Perhaps in less than a decade, people will be able to buy a robot for their parents as a birthday gift,’ Musk said.

Tesla is set to unveil a prototype of the robot on September 30 at AI Day.

‘Tesla Bots are initially positioned to replace people in repetitive, boring, and dangerous tasks,’ Elon Musk explained in a recent essay.
